Eyeware: Sponsor & Exhibitor at the Human-Robot Interaction Conference (HRI) 2019, Daegu, South Korea

The International Conference on Human-Robot Interaction brings together leading researchers from around the world and gives them the opportunity to present their innovative projects. The conference focuses on exchanging ideas about the latest technological developments in HRI. The field is advancing quickly, so this annual meeting is an invaluable opportunity to keep up with its latest findings. More importantly, it inspires and supports multidisciplinary research.

# HRI’s 2019 Focus: Collaborative HRI

Whereas last year’s conference in Chicago focused on “Robots for Social Good,” this year’s theme was “Collaborative HRI.”

Among the HRI 2019 keynote speakers, we highlight:

Kyu Jin Cho – distinguished Lecturer at Seoul National University. He has been exploring novel soft robot designs, including a water-jumping robot, origami robots, and a soft wearable robot for the hand, called Exo-Glove Poly, which was covered by more than 300 news outlets.

Janet Vertesi – Post-Doctoral Fellow at Princeton University’s Society of Fellows in the Liberal Arts; Lecturer in Sociology. Most of her research examines robotic spacecraft teams at NASA and how organizational factors affect and reflect planetary scientists’ activities and scientific results.

Jangwon Lee – Ph.D. candidate in computer music at the Music and Audio Computing Lab. His research focuses on mobile music interaction, specifically how new interaction methods and the constraints of mobile devices can be used in expressive computer music. He is also a member of the Korean band Peppertones and an active television entertainer.

Gil Weinberg – Ph.D. in Media Arts and Sciences from MIT. His research focuses on developing artificial creativity and musical expression for robots and augmented humans. His analysis and applications center on human-robot interaction and music technology.

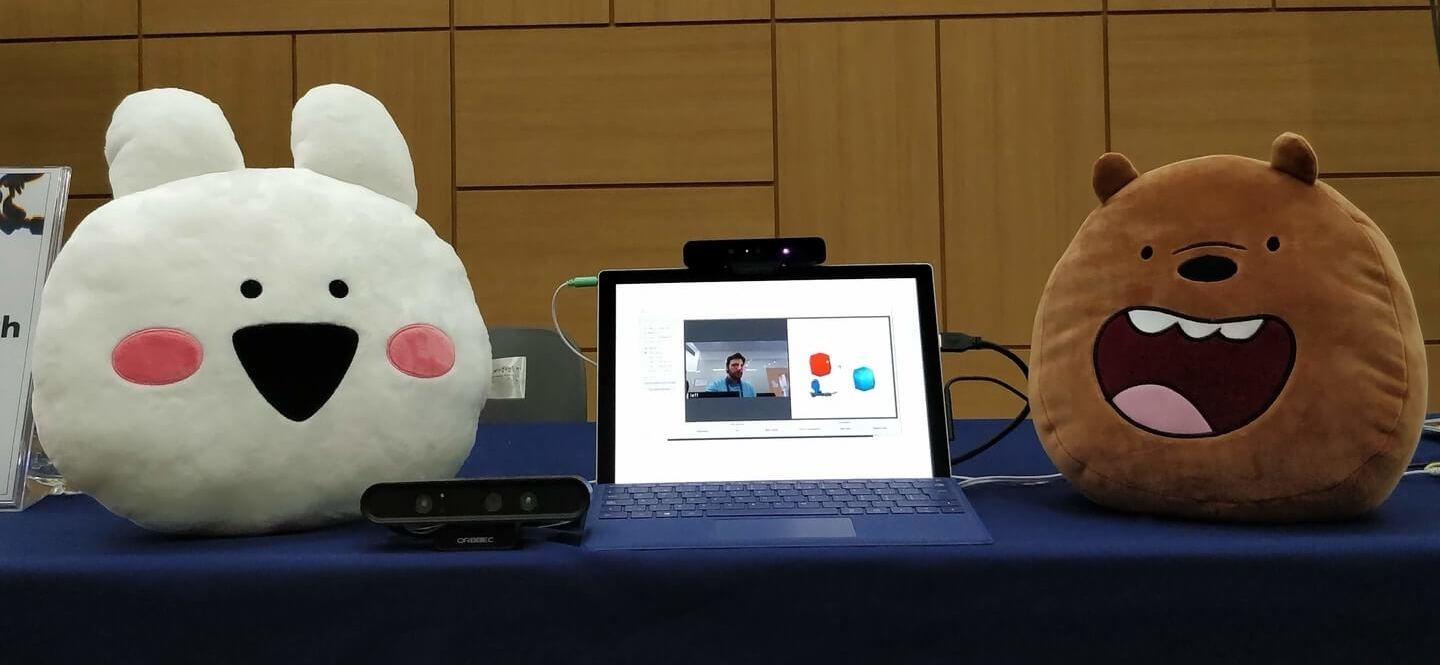

Human-plush toy interaction at Eyeware’s booth, HRI 2019 Conference

At the International Conference on Human-Robot Interaction 2019, Eyeware showcased GazeSense, its 3D eye tracking software, which enables interaction between humans and inanimate objects. Using only gaze tracking, visitors interacted with Extremely Rabbit and Grizzly Bear at the Eyeware booth.

Jung Kim (HRI 2019 Chairman) interacting with Grizzly Bear

As a silver sponsor of HRI 2019 in South Korea, Eyeware actively participated in the event. The image below shows our colleague Bastjan Prenaj, the company’s CDBO, giving a demo of the GazeSense 3D software. When Bastjan directs his gaze toward Extremely Rabbit, the rabbit starts interacting with him and asks for his undivided attention. Grizzly Bear, on the other hand, was feeling flirtatious at this conference: whenever someone looked at him, he tried out Joey Tribbiani’s famous pickup line, #HowYOUdooin? If you’re wondering how the stuffed toys were able to respond to the audience, read on to learn how GazeSense 3D made this scenario possible.

# How does GazeSense 3D work?

GazeSense 3D is eye tracking software for depth-sensing cameras. It tracks people’s attention toward objects of interest in an open space. In the demo scenario presented at the HRI conference, the software captured each visitor’s gaze and used it to trigger interactive behavior in the plush toys: Extremely Rabbit and Grizzly Bear “talked” when the user looked at them. The same interaction would have worked had the toys been replaced with other objects.
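To make the idea of "looking at an object triggers a behavior" more concrete, here is a minimal, generic sketch. It is not GazeSense 3D's API or its actual method, whose internals are not described here. It assumes that a gaze is available as a 3D ray (eye position plus gaze direction) in camera coordinates, that each object of interest can be approximated by a sphere at a known position, and that a behavior should fire once the same object has been looked at for a few consecutive frames. All names (`ObjectOfInterest`, `gazed_object`, `DwellTrigger`), the sphere model, the coordinates, and the dwell threshold are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class ObjectOfInterest:
    """Hypothetical model of a tracked object: a named sphere in camera coordinates (meters)."""
    name: str
    center: Vec3
    radius: float


def _sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def gazed_object(eye: Vec3, gaze_dir: Vec3,
                 objects: Sequence[ObjectOfInterest]) -> Optional[str]:
    """Return the name of the nearest object hit by the gaze ray, or None."""
    norm = math.sqrt(_dot(gaze_dir, gaze_dir))
    if norm == 0:
        raise ValueError("gaze direction must be non-zero")
    d = (gaze_dir[0] / norm, gaze_dir[1] / norm, gaze_dir[2] / norm)
    best_name, best_t = None, math.inf
    for obj in objects:
        oc = _sub(obj.center, eye)               # vector from eye to sphere center
        t_closest = _dot(oc, d)                  # distance along ray to point nearest the center
        if t_closest < 0:
            continue                             # object lies behind the viewer
        miss_sq = _dot(oc, oc) - t_closest ** 2  # squared distance from ray to center
        if miss_sq > obj.radius ** 2:
            continue                             # ray passes beside the sphere
        t_hit = t_closest - math.sqrt(obj.radius ** 2 - miss_sq)  # entry point on sphere
        if t_hit < best_t:                       # keep the nearest (unoccluded) hit
            best_name, best_t = obj.name, t_hit
    return best_name


class DwellTrigger:
    """Fire once when the same object is gazed at for `dwell_frames` consecutive frames."""

    def __init__(self, dwell_frames: int = 15):
        self.dwell_frames = dwell_frames
        self._current: Optional[str] = None
        self._count = 0
        self._fired = False

    def update(self, target: Optional[str]) -> Optional[str]:
        if target != self._current:              # gaze moved: restart the dwell timer
            self._current, self._count, self._fired = target, 0, False
        if target is None:
            return None
        self._count += 1
        if self._count >= self.dwell_frames and not self._fired:
            self._fired = True                   # trigger the behavior only once per dwell
            return target
        return None


if __name__ == "__main__":
    # Hypothetical scene: two toys about 1.5 m in front of the camera.
    toys = [
        ObjectOfInterest("Extremely Rabbit", (-0.3, 0.0, 1.5), 0.15),
        ObjectOfInterest("Grizzly Bear", (0.3, 0.0, 1.5), 0.2),
    ]
    trigger = DwellTrigger(dwell_frames=3)
    for frame in range(5):
        target = gazed_object((0.0, 0.0, 0.0), (0.3, 0.0, 1.5), toys)
        event = trigger.update(target)
        if event:
            print(f"frame {frame}: {event} starts talking")
```

Requiring a short dwell, rather than reacting on the very first frame, is one simple way to avoid triggering on glances that merely sweep past an object; a suitable threshold would depend on the camera's frame rate and the desired responsiveness.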

Left Display: Bastjan using the GazeSense 3D gaze tracking feature

Right Display: 3D visualization of Bastjan gazing at the identified objects

Let us know which other 3D eye tracking topics or applied solutions you’d like to learn more about by emailing [email protected] or by mentioning us on any of the social networks where we are active: Twitter, Facebook, or LinkedIn.